To describe a Markov chain, we need one extra element that’s missing in the definition of finite-state machines: a distribution of probabilities that maps each state to each state, including the original state itself. A stochastic process is a process where the transition from the current state to the next is determined not only by the current state of the system, but also by an element of surprise or randomness that require a probabilistic description as opposed to a deterministic one. Interestingly, even though finite-state machines were conceptual tools used to model the process of a simple mechanical computation, they can now be used to simulate and facilitate the design of much more sophisticated and modern architectures of computers.Ī Markov chain is a model that we can use to describe stochastic time-dependent processes. Much more complex finite-state machines exist, and the domain of applicability is the primary determinant of the size of the state space and the state transition function. If the set of states is finite, the last state in the set would need to map to some other state or to itself, or the state transition function would not hold. For example, they could associate each state in the state space to the next. More complex machines can have more sophisticated state transitions. This state machine does, today and for all eternity, absolutely nothing! We can imagine observing a machine with this rule in regular intervals, and we would observe that if we know the state at any given time, we also know its state at any time in the future. The simplest state transition function is the one that associates every state with itself. Technically, the formal definition includes an alphabet of symbols, but this can be omitted for simplification purposes. If is the symbol that indicates the state transition function, then we can think of as a function that maps the state space onto itself.

#Finite state automata how to#

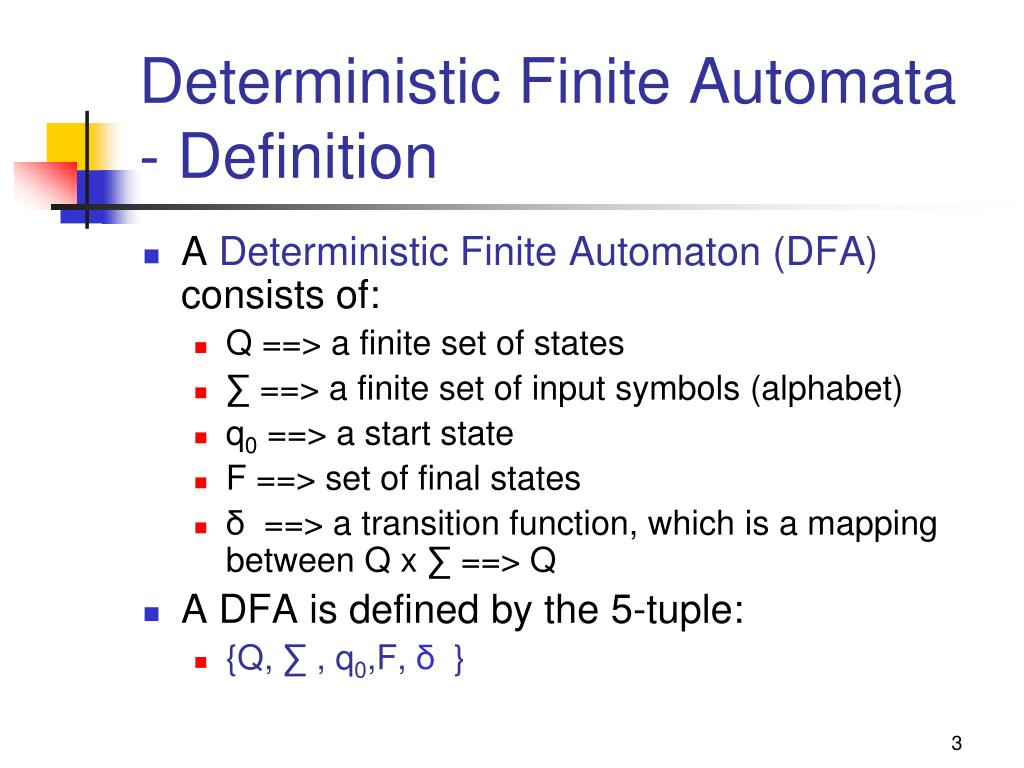

In this sense, we can consider the set of rules as a function of state transition: in fact, this function tells us how to associate any of the possible states in the state space with another. This set of rules comprises a set of operations associated with each of the possible states of a machine and assigns to them a corresponding output state.

The second component of a finite-state machine is a set of rules that indicate the transition between states.

It’s common to refer to individual states with the letter and a sequential natural number in subscript, so that indicates the state number in a collection of possible states. The set of states associated with a particular process takes the name of state space. If we cannot find any such features to discriminate between states, by the principle of identity of indiscernible, then the two states are the same. It makes sense to treat the possible configurations of water as a collection of three states, and not to distinguish further or finer, because we cannot identify any discernible features that allow us to further subset the states into micro-states. Water, in fact, can assume one of three possible states according to the temperature at which we heat it (or respectively, cool it): The typical example, in this sense, is that of water. Certain physical processes lead objects to change configuration, shape, or form and assume one of a finite number of configurations, shapes, or forms which we can track over time.

0 kommentar(er)

0 kommentar(er)